What is Software Defined Networking (SDN)?

In this section, we’ll try to define SDN, explain the background to why it was created, give a high-level overview of how SDN works and describe current examples of SDN technologies.

Definition of SDN

There’s no firm definition of Software Defined Networking in the strictest sense. Broadly, however, it’s a collection of ideas and technologies intended to make the network more efficient to configure and operate, easier to manage, and more open to innovation, either within the network itself or on top of it.

A brief history of SDN

In order to understand the benefits of SDN, why it was developed and why it’s created such interest, we need to understand its history, as well as the recent history of networking in general.

SDN came to prominence in early 2011, building on the OpenFlow protocol that grew out of a collaboration between Stanford University and the University of California at Berkeley. The research team were looking for ways to enable the networking industry to match the rapid advancements in cloud computing, social media and virtualisation. These technologies have one thing in common: they depend on a scalable, easily managed and reliable network.

The limits of traditional networking

While networking has evolved at pace, it was falling behind these other technologies. Networking was based on technologies dating back many decades and, as a result, it was starting to hold back the progress of the systems that sat on top of it. One example is the need to manage each network device individually.

For instance, making a change across the network, such as introducing a new service, required manual configuration on every single networking device, making it a highly laborious process. By contrast, the systems that sit on top of the network, such as virtualised workloads or applications, are typically managed from a single centralised management system.

Along with the issue of decentralised management is the proliferation of network-attached devices. In the case of the Internet of Things (IoT), for instance, networks can become overrun with devices that wouldn’t typically have been attached. These include a diverse array of devices, from environmental sensors and security cameras through to industrial control systems and autonomous vehicles.

Another example of this proliferation is Bring Your Own Device (BYOD), where users bring their own devices into work and expect to be able to use them on a potentially sensitive and secured network, sometimes even without the IT department’s consent or awareness. Like IoT devices, BYOD needs to be carefully regulated.

Many network administrators must now do more with less and this is resulting in networks that are becoming increasingly complex and difficult to manage.

The research teams worked with major companies in the industry, such as Google, Facebook and Microsoft, and formed the Open Networking Foundation (ONF) to continue the work on SDN and OpenFlow.

SDN architecture

An SDN network can typically be split into 3 layers: the application layer, the control layer, and the infrastructure layer. The application layer sits on top of the SDN network and is used to interact with it, providing services such as monitoring, automated fault resolution and advanced reporting. The control layer is where the network is configured. This configuration is then pushed out to the infrastructure layer where the actual networking equipment exists.

At the heart of OpenFlow, and of any other SDN network, is the controller. The controller takes the forwarding logic that would normally run on each individual network component (the switches, routers, etc.) to decide where to send traffic, and centralises it in a single place. Traditional networks, by design, exhibit what is known as ‘per hop’ behaviour. This means that, as traffic is received by each device, that device alone decides where to send it, based on the information it has received from the rest of the network.
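
As a minimal sketch of what centralising the forwarding decision can look like, the Python below gives a hypothetical controller a global view of a small, invented topology, computes a path with Dijkstra's shortest-path algorithm and turns it into a next-hop entry for each switch on that path. The switch names, link costs and function names are illustrative assumptions, not any controller's API; in a per-hop network, each device would instead reach its own decision from its routing protocol.

```python
import heapq

# Hypothetical topology held by the controller: switch -> {neighbour: link cost}
TOPOLOGY = {
    "s1": {"s2": 1, "s3": 4},
    "s2": {"s1": 1, "s3": 1, "s4": 5},
    "s3": {"s1": 4, "s2": 1, "s4": 1},
    "s4": {"s2": 5, "s3": 1},
}


def shortest_path(topology, src, dst):
    """Dijkstra's algorithm over the controller's global view of the network."""
    queue = [(0, src, [src])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == dst:
            return path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, weight in topology[node].items():
            if neighbour not in visited:
                heapq.heappush(queue, (cost + weight, neighbour, path + [neighbour]))
    raise ValueError(f"no path from {src} to {dst}")


def next_hop_entries(topology, src, dst):
    """Turn one computed path into a next-hop entry for each switch along it."""
    path = shortest_path(topology, src, dst)
    return {hop: nxt for hop, nxt in zip(path, path[1:])}


print(next_hop_entries(TOPOLOGY, "s1", "s4"))  # {'s1': 's2', 's2': 's3', 's3': 's4'}
```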

The motivation for SDN

This ‘per hop’ behaviour was very much the intention of the original design of the internet, and of networking as a whole. Because the internet was initially designed by the US Department of Defence as a means to provide a command and control system that could survive a nuclear strike, it is unsurprisingly resilient when outages occur. This is mainly due to the lack of a centralised control system.

Unfortunately, the management of this ‘per hop’ behaviour does not scale well. Logging onto individual devices and making changes may work when you only have a small number of devices to manage, but even in medium-sized organisations the number of networking devices will often be in the hundreds. When scaling up to central government, large corporates and especially hyper-scale cloud providers such as Google, AWS or Microsoft, the number of devices goes from hundreds to thousands. Managing each of these devices individually at this scale is extremely difficult. Many network engineers and network operators employ scripting and management systems, but these devices don’t lend themselves to being managed in this way, making automation difficult, unreliable and expensive.

The centralised OpenFlow controller contains the entire network configuration and controls the forwarding decisions of each networking device. So, the main benefit of SDN is the removal of the need to manage each individual switch, router, firewall or access point. If it’s necessary to introduce a new service, for instance, the new configuration only needs to be completed on the centralised controller, which will then direct the network according to the centralised configuration. This centralised configuration is referred to as a policy.
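
To illustrate defining a change once as a policy, here is a hypothetical sketch in which a single service policy is rendered into configuration for every device in a controller's inventory. The `ServicePolicy` fields, the device names and the configuration lines are invented for illustration and do not follow any vendor's syntax.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServicePolicy:
    """Hypothetical network-wide policy: one definition for a new service."""
    name: str
    vlan: int
    allowed_ports: tuple[int, ...]


# Hypothetical inventory held by the controller
DEVICES = ["access-sw-01", "access-sw-02", "core-rtr-01", "edge-fw-01"]


def render_device_config(device: str, policy: ServicePolicy) -> list[str]:
    """Translate the single policy into illustrative per-device settings."""
    lines = [f"# {device}: service {policy.name}", f"vlan {policy.vlan}"]
    if device.startswith("edge-fw"):
        # Only firewalls receive the port-level permit rules in this sketch
        lines += [f"permit tcp any any eq {port}" for port in policy.allowed_ports]
    return lines


def apply_policy(policy: ServicePolicy, devices: list[str]) -> dict[str, list[str]]:
    """The operator edits one policy; the controller derives every device's config."""
    return {device: render_device_config(device, policy) for device in devices}


configs = apply_policy(ServicePolicy("payroll", 120, (443,)), DEVICES)
for device, lines in configs.items():
    print(device, lines)
```

Adding a fifth device to `DEVICES` changes nothing about how the service is defined; it simply receives its share of the same policy.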

The state of the network is fed back to the centralised controller, which uses this information, along with its configuration, to direct the flow of traffic through the network. This is often referred to as the ‘control plane’ within networking.

The configuration of the OpenFlow controller or of the networking devices is the ‘management plane’. This is the GUI or command line that users interact with, or the automated management systems that use protocols such as the Simple Network Management Protocol (SNMP).

The actual network traffic, the data forwarded on the network, is referred to as the ‘data plane’. It exists only on the networking devices, meaning that the traffic doesn’t pass through the centralised controller. Instead, the controller is only aware of this traffic so that it can make the appropriate forwarding decisions.
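
The split between the control plane and the data plane can be sketched with a toy flow table, loosely modelled on OpenFlow's prioritised match/action entries: the controller installs entries, and the switch forwards each packet by looking it up locally, without consulting the controller. The field names, action strings and the choice to drop packets on a table miss are simplifying assumptions; in OpenFlow, what happens on a miss is itself configurable.

```python
from dataclasses import dataclass, field


@dataclass
class FlowEntry:
    priority: int
    match: dict   # header fields that must all match, e.g. {"dst_ip": "10.0.0.2"}
    action: str   # e.g. "output:2" or "drop"


@dataclass
class Switch:
    """Data plane only: looks packets up in entries installed by the controller."""
    name: str
    flow_table: list = field(default_factory=list)

    def install(self, entry: FlowEntry) -> None:
        # Called by the (hypothetical) controller, i.e. the control plane
        self.flow_table.append(entry)
        self.flow_table.sort(key=lambda e: e.priority, reverse=True)

    def forward(self, packet: dict) -> str:
        # The highest-priority entry whose match fields all agree with the packet wins
        for entry in self.flow_table:
            if all(packet.get(k) == v for k, v in entry.match.items()):
                return entry.action
        return "drop"  # simplifying assumption for a table miss


sw = Switch("s1")
sw.install(FlowEntry(10, {"dst_ip": "10.0.0.2"}, "output:2"))
sw.install(FlowEntry(20, {"dst_ip": "10.0.0.2", "tcp_dst": 23}, "drop"))
print(sw.forward({"dst_ip": "10.0.0.2", "tcp_dst": 443}))  # output:2
print(sw.forward({"dst_ip": "10.0.0.2", "tcp_dst": 23}))   # drop
```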

So, within traditional networks, the management, control and data planes all exist within each individual network device. SDN originally intended to move the management and control planes into the centralised controller, leaving only the data plane on the devices; however, this brings its own issues, which we’ll discuss below.

Current SDN solutions

Unfortunately for OpenFlow, while considered a revolutionary networking technology that kickstarted the SDN concept, its centralised nature and lack of implementation by the dominant vendors has meant deployments of this technology are limited.

There are concerns around the survivability of the network when the control plane is separated from the data plane, where traffic is forwarded. Suppose you have a central data centre (DC) that hosts the centralised controllers, and this DC becomes isolated from the rest of the network, due to a failure on the wide area network, for example. No traffic can then be forwarded, as the networking devices don’t know what to do with it. Essentially, the loss of the centralised controller in OpenFlow leaves the network without its central brain, and the network stops forwarding traffic. Traditional networks are like lots of smaller brains that talk to each other, meaning they can easily be designed with no single point of failure.

Since OpenFlow, the major networking vendors, such as Cisco and Juniper, have been developing their own SDN technologies. Many of these take the original idea of a hyper-scalable network with centralised management, but move the control plane back to the individual devices. This offers the best of both worlds: the centralised management model means that changes to the network can take place at scale, but the loss of a centralised controller doesn’t mean that the network stops dead.

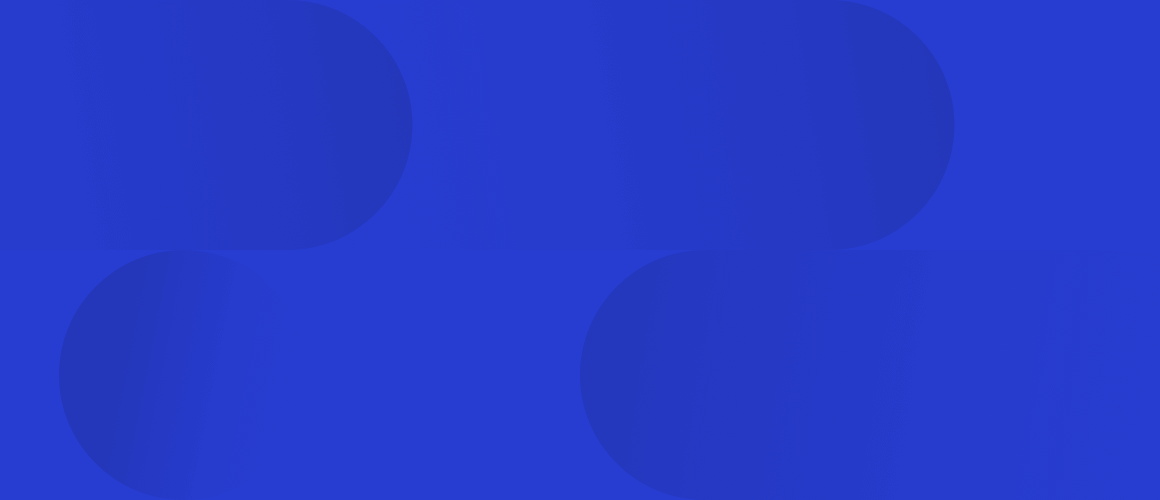

The diagram below provides an example of how SDN is deployed today, using widely adopted and popular Cisco SDN solutions, to provide a complete SDN network:

This diagram shows how SDN can be used across the WAN to securely link various sites, bring users and data centres together, and provide secure, scalable access into public cloud services. Connectivity, routing and security over the WAN for each remote site is managed via the SD-WAN controllers, with the entire data centre and office/campus network being similarly managed and monitored via their own centralised controllers.

What does Software Defined Networking (SDN) actually mean?

Software defined networking is the process of building an entire network using only policies from a centralised management system. In the past, we configured each one of the devices in the network independently of each other. This process was costly and time-consuming. But with software defined networking, we can now quickly describe a design of a network that will support multiple functions, without the need to configure each one of the devices individually. Within SDN, the network itself functions as a single entity, rather than an array of different components with their own independent functions, configurations and logic.

SDN moves the management plane from individual network devices to a centralised controller or controllers. The underlying network equipment still contains the data plane, which physically forwards the traffic down the wires, and often the control plane, where traffic forwarding decisions happen.

Configuration is made on the centralised controllers, in the management plane, and pushed down to the network equipment, where it directs the control plane. This enables the network equipment to control the data plane and forward the network traffic. The control plane often exists on the network equipment so that, if communication with the centralised controller is lost, the network equipment can continue to make forwarding decisions based on the latest version of the network configuration it received from the controller.
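
A minimal sketch of this behaviour, assuming a hypothetical `Controller` that publishes versioned configuration and a hypothetical `ManagedDevice` that keeps the last version it received: when the controller becomes unreachable, the device simply keeps forwarding with its cached state. Class and method names are illustrative, not taken from any product.

```python
from typing import Optional


class ControllerUnreachable(Exception):
    """Raised when the device cannot reach the centralised controller."""


class Controller:
    """Hypothetical controller: holds the latest configuration and its version."""

    def __init__(self) -> None:
        self.version = 0
        self.config: dict = {}
        self.reachable = True

    def publish(self, config: dict) -> None:
        # A change made once in the management plane becomes a new version
        self.version += 1
        self.config = dict(config)

    def fetch(self) -> tuple[int, dict]:
        if not self.reachable:
            raise ControllerUnreachable("controller cannot be reached")
        return self.version, dict(self.config)


class ManagedDevice:
    """Keeps a local control plane fed by the last configuration it received."""

    def __init__(self, controller: Controller) -> None:
        self.controller = controller
        self.version = 0
        self.routes: dict = {}

    def sync(self) -> bool:
        """Pull the latest configuration; if the controller is unreachable, keep what we have."""
        try:
            version, config = self.controller.fetch()
        except ControllerUnreachable:
            return False
        if version > self.version:
            self.version, self.routes = version, config
        return True

    def next_hop(self, prefix: str) -> Optional[str]:
        # Forwarding decisions use local state only, so they survive controller loss
        return self.routes.get(prefix)


ctrl = Controller()
device = ManagedDevice(ctrl)
ctrl.publish({"10.1.0.0/16": "wan-1"})
device.sync()
ctrl.reachable = False                 # e.g. the DC hosting the controller is isolated
print(device.sync())                   # False: no update could be fetched
print(device.next_hop("10.1.0.0/16"))  # wan-1: last known configuration still in use
```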

Types of Software Defined Networks

SDN can be divided into several subcategories:

- Data Centre SDN

- WAN SDN or SD-WAN

- LAN (Campus) SDN

Each solution is specifically designed to meet the needs of each deployment type. SD-WAN is designed to run at scale and allow for flexible WAN deployments over any type of connection. Data Centre SDN is designed to be scalable and flexible to incorporate the needs of different applications hosted in a data centre. LAN or Campus SDN is designed to accommodate lots of different end users and their attached devices.

SDN or IBN: which one?

As the technology has matured, the term SDN – which describes the process of programmatically creating a network and its interactions – has begun to give way to the term Intent Based Networking (IBN). IBN better describes the process of using a software controller to create a policy that sends configuration to the network devices – regardless of whether these devices are implemented in hardware or software. While SDN and IBN are effectively the same thing, you can expect to see the term IBN being used in favour of SDN in the future. For the time being, though, SDN will continue to be the preferred term.

Network or Fabric?

The term ‘fabric’ comes from Storage Area Networking (SAN), and specifically from a storage networking technology known as Fibre Channel (FC). SANs are used in data centres to allow the storage of large amounts of data on disks that can be shared by servers over the SAN. Rather than being described as a network, an FC SAN was described as a fabric, because all of its parts interact and are controlled as a single entity. The term has been borrowed for SDN / IBN for the same reason. Because FC SANs were most commonly found in data centres, ‘fabric’ was first used there to describe Ethernet networks too. In reality, the terms fabric and network are interchangeable, and both are correct.

Automation and orchestration

One of the immediate network benefits of SDN is the ability to create an overarching policy-based ‘plan’ for how the designer wants the network to interact both with the applications it carries and with other networks. In effect, this is programmatically using software to orchestrate the configuration of each device in the fabric. Therefore, the SDN controller is a fabric orchestration engine, used to automate the deployment of networking configuration.

The rise of infrastructure as code has thrown up an interesting quandary. Seeing as we can automate the deployment of not just the network, but also the applications, supporting compute and its storage, is it better to program everything using the software controllers for each one? Or use a top-level orchestration engine that can program the controllers themselves, negating the need to interact directly with the software controllers?

It seems a more straightforward proposition to use a top-level orchestration engine that interacts with the network, compute, storage, applications and various cloud services. This way, a policy only needs to be defined once. The top-level orchestration engine interprets the policy intent and creates the specific code to pass to each of the lower level software controllers, which are orchestration engines themselves. The most accurate term for this process is Intent Based Infrastructure (IBI), which encompasses the process of defining the entire infrastructure and its interaction, as opposed to defining each part individually.

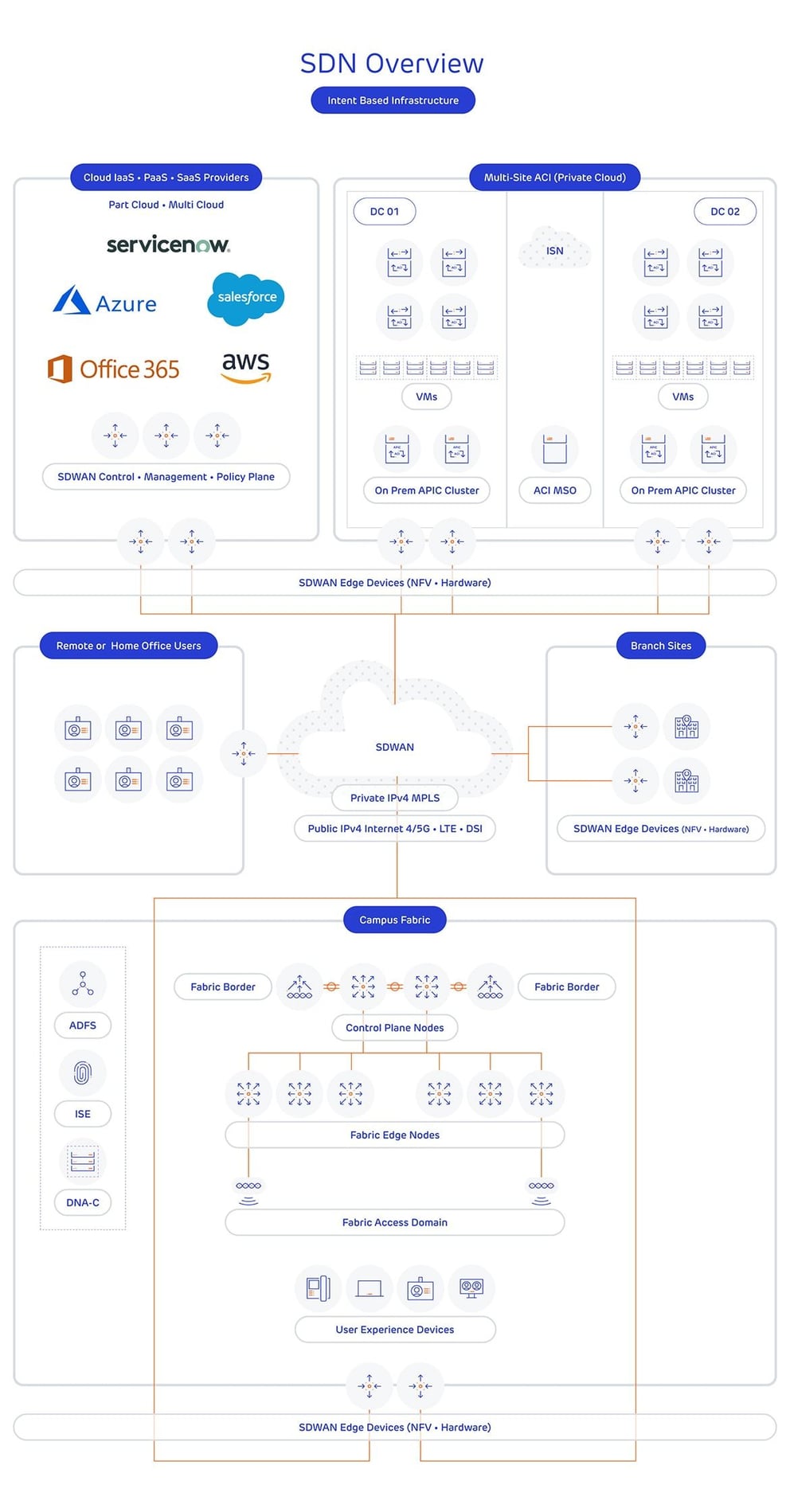

The diagram below shows how orchestration can work by using a centralised orchestration engine to drive configuration throughout the entire network infrastructure, passing instructions down from the central orchestration engine to the various controllers for the different SDN types. This orchestration uses SDN technologies developed over the past few years, but are based on pre-existing technologies used elsewhere in the industry to configure virtual and physical devices or applications en masse:

Application Programming Interfaces (APIs), an extremely common technology in software development, are used to push instructions from the orchestration engine to the various centralised controllers. In turn, these controllers interpret, translate and push this configuration down to the networking and server equipment. These instructions are sent using industry standard methods such as Representational State Transfer (REST), which uses World Wide Web standards to send and receive information and instructions. This is a reliable method, with many tools and functions already available.
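
As a hedged sketch of this pattern, the Python below takes one intent defined in a top-level orchestration engine, translates it into a payload for each of two controllers, and prepares a REST-style HTTP POST with a JSON body for each using the standard library's `urllib.request`. The intent schema, payload fields and controller URLs are hypothetical; real controllers each publish their own REST APIs, and the requests are only built here, not sent.

```python
import json
import urllib.request

# One high-level intent, defined once (hypothetical schema)
INTENT = {"application": "web-shop", "tier": "gold", "sites": ["london", "leeds"]}

# Hypothetical controller endpoints; real products expose their own REST APIs
CONTROLLERS = {
    "datacentre": "https://dc-controller.example.net/api/policies",
    "sdwan": "https://sdwan-controller.example.net/api/policies",
}


def translate(intent: dict, domain: str) -> dict:
    """Interpret the intent as a domain-specific payload (illustrative fields only)."""
    if domain == "datacentre":
        return {"tenant": intent["application"], "qos": intent["tier"]}
    if domain == "sdwan":
        return {
            "app": intent["application"],
            "sites": intent["sites"],
            "path_preference": "low-latency" if intent["tier"] == "gold" else "any",
        }
    raise ValueError(f"unknown domain {domain}")


def build_requests(intent: dict) -> list[urllib.request.Request]:
    """Prepare one REST request (HTTP POST, JSON body) per controller; nothing is sent."""
    prepared = []
    for domain, url in CONTROLLERS.items():
        body = json.dumps(translate(intent, domain)).encode("utf-8")
        prepared.append(urllib.request.Request(
            url, data=body, method="POST",
            headers={"Content-Type": "application/json"}))
    return prepared


for req in build_requests(INTENT):
    print(req.get_method(), req.full_url, req.data.decode())
    # urllib.request.urlopen(req) would send it to a real controller
```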

Data Centre SDN

Data centre specific SDN architectures feature software-defined overlays or controllers that are abstracted from the underlying network hardware. This manifests either as a policy/management plane for specifically designed hardware, or as Network Function Virtualisation (NFV), where network devices run as virtualised workloads on a hypervisor, offering intent- or policy-based management of the network as a whole. The result is a data centre network that is better aligned with the needs of application workloads, through automated (and therefore faster, more reliable and more efficient) provisioning, programmatic network management, pervasive application-oriented visibility and, where needed, direct integration with cloud orchestration platforms.

Two different examples of data centre SDN are Cisco’s ACI - which uses software to control the deployment of dedicated hardware - and VMware’s NSX-V / NSX-T, where the forwarding and deployment functionality is implemented purely in software. It’s worth noting that, with VMware NSX, switching hardware will usually be required to form the underlay network.

Most data centre SDN technologies use VXLAN at the data plane, as is the case for both NSX and ACI; the differences usually lie in how the control plane and management plane function. VXLAN works by encapsulating the packets sent by the servers as a network ‘overlay’ and sending them over an ‘underlay’ network. The underlay is typically the SDN infrastructure, so either the physical switches, the servers, or both. The main benefit of this is that discrete networks can contain the traffic, for security or multi-tenancy purposes, while allowing these networks to easily span multiple data centres for DR and load balancing purposes. VXLAN also allows for the easy insertion of additional network services, such as traffic inspection or application and server load balancing, without the network and administrative complexities generally associated with these technologies.
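
To show what the encapsulation adds, here is a sketch that builds the 8-byte VXLAN header defined in RFC 7348: a flags byte with the I bit set, reserved bits, and the 24-bit VXLAN Network Identifier (VNI) that keeps each overlay segment's traffic separate. The outer UDP/IP/Ethernet headers that a real VXLAN tunnel endpoint (VTEP) adds for the underlay are omitted, and the helper names are illustrative.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned UDP destination port for VXLAN (RFC 7348)


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags (I bit set), reserved bits, 24-bit VNI, reserved byte."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    flags_word = 0x08 << 24  # I flag set; the remaining 24 bits are reserved (zero)
    return struct.pack("!II", flags_word, vni << 8)


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prefix the server's original Ethernet frame with a VXLAN header.

    A real VTEP would then wrap this in outer UDP/IP/Ethernet headers addressed
    to the remote VTEP across the underlay; that step is omitted here.
    """
    return vxlan_header(vni) + inner_frame


def vni_of(packet: bytes) -> int:
    """Read the VNI back out, as the receiving VTEP would to pick the right segment."""
    _, second_word = struct.unpack("!II", packet[:8])
    return second_word >> 8


frame = b"\x00" * 14 + b"payload"  # stand-in for an inner Ethernet frame
packet = encapsulate(frame, vni=5001)
print(packet[:8].hex(), vni_of(packet))  # 0800000000138900 5001
```

Because the VNI is 24 bits wide, a fabric can address roughly 16 million segments, compared with the 4,094 usable 12-bit VLAN IDs.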

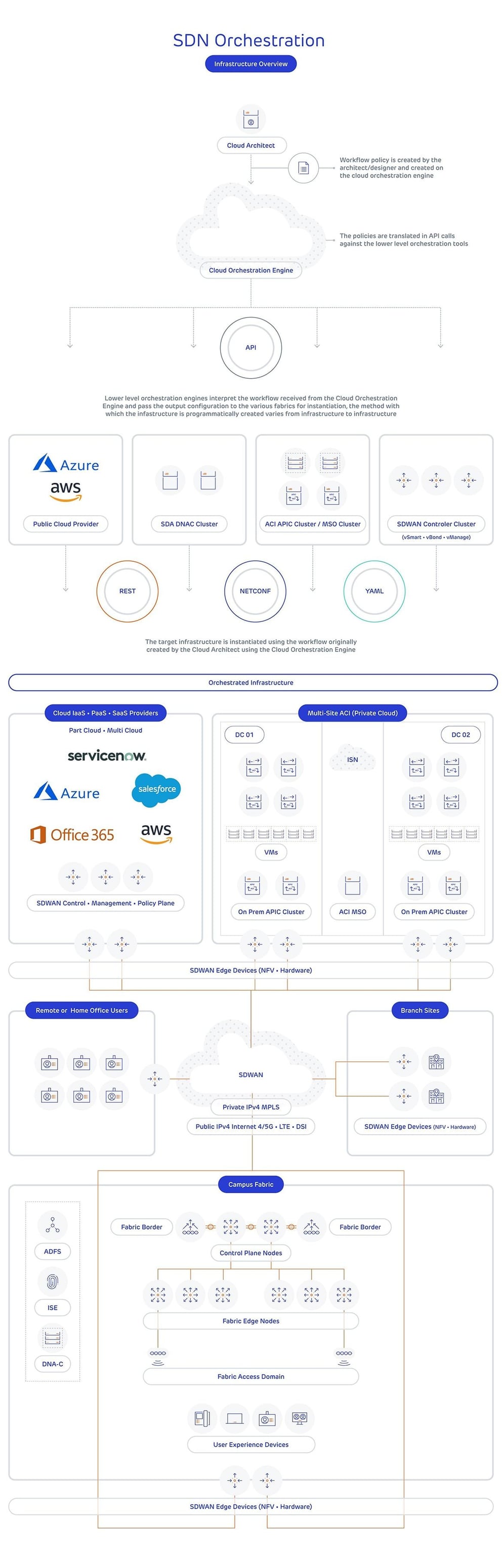

The diagram below shows a typical Cisco ACI deployment:

Two data centres are linked together for resiliency. Because VXLAN allows servers to migrate seamlessly from one data centre to another, the data centres can be linked by any method with appropriate capacity and latency. The diagram shows that applications, as well as network services such as firewalling and load balancing, can be decoupled from the physical network, allowing the data centre to be quickly reconfigured to match changes in applications with no interruption to service. How the network behaves is driven by the policy defined on the central controllers, and the same policy can be applied uniformly across both data centres, ensuring that, should a failover need to occur, the configuration of the two DCs is consistent.

Data Centre SDN benefits

Data centre SDN is primarily beneficial due to its ability to programmatically define and control the VXLAN overlays within the fabric itself. A VXLAN fabric can be configured manually on the switching platforms, but this configuration is complex and considerably more involved than traditional data centre networking.

Rather than the network blindly transporting the application traffic like in a switched Ethernet network, the data centre SDN technologies are designed around the application itself. This means that how the various parts of an application interact - along with the users who consume them - can be defined at a policy level.

All of the common data centre SDN technologies are able to implement the zero-trust security model in their fabric. Again, this function could be implemented manually, but it would be challenging to manage. The zero-trust model assumes that no access to the application is trusted. As such, users must authenticate themselves whenever they access any resources. This authentication is usually automated and doesn’t involve any user interaction other than the initial centralised user directory login.
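
A minimal sketch of the default-deny idea behind zero trust, assuming hypothetical application groups and an explicit allow-list of group-to-group flows: anything that is not authenticated, or not explicitly permitted, is refused. The group names, ports and function are illustrative; data centre SDN products express this with their own policy constructs.

```python
# Hypothetical allow-list: (source group, destination group) -> permitted TCP ports.
# Anything not listed is denied, which is the essence of a zero-trust policy.
ALLOWED = {
    ("web", "app"): {8443},
    ("app", "db"): {5432},
}

# Hypothetical mapping of authenticated workloads to application groups
MEMBERSHIP = {"web-01": "web", "app-01": "app", "db-01": "db"}


def is_permitted(src: str, dst: str, port: int, authenticated: bool) -> bool:
    """Default deny: unauthenticated, unknown or unlisted flows are refused."""
    if not authenticated:
        return False
    src_group, dst_group = MEMBERSHIP.get(src), MEMBERSHIP.get(dst)
    if src_group is None or dst_group is None:
        return False
    return port in ALLOWED.get((src_group, dst_group), set())


print(is_permitted("web-01", "app-01", 8443, authenticated=True))  # True
print(is_permitted("web-01", "db-01", 5432, authenticated=True))   # False: no web -> db rule
```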

Combined with the ability to deploy and manage a fabric using just policies, this vastly reduces the time it takes to stand up and manage a complex multi-tenancy fabric in the data centre.

Alternative data centre SDN technologies

Although many of the leading hardware and software vendors have their own solutions for data centre SDN, it’s worth noting that these aren’t always a good fit for an organisation, due to size or other constraints. It’s possible to use open source orchestration solutions such as Ansible, Puppet, Chef, OpenStack etc. to configure and deploy overlays on a hypervisor, such as KVM or VMware, that provide most of the functionality available in vendor-specific products.

The disadvantage of deploying an open source data centre SDN solution would be a lack of vendor support. If the end-user were to encounter issues, such as software bugs or misconfiguration, they’d either need to resolve these issues themselves or pay a 3rd party to do so. Also, each deployment could be bespoke to each organisation, with little in the way of standardisation; bespoke solutions often suffer from scalability and stability issues and are difficult to manage.

WAN SDN or SD-WAN

The driving ideas behind the development of SDN are myriad. It promises to reduce the complexity of statically defined networks, make automating network functions much easier and allow for the simpler provisioning and management of networked resources - everywhere from the data centre to the campus or wide area network. It also provides the technological framework for micro-segmentation.

Micro-segmentation has developed as a notable use case for SDN. As SDN platforms are extended to support multi-cloud environments, they’ll be used to mitigate the inherent complexity of establishing and maintaining network and security policies across businesses. SDN’s role in the move toward private cloud and hybrid cloud adoption seems a natural next step.

For example, Cisco's ACI Anywhere package would have policies configured through Cisco's SDN Application Policy Infrastructure Controller (APIC) using native APIs offered by a public-cloud provider to orchestrate changes within both the private and public cloud environments.

The software-defined wide area network (SD-WAN) is a natural application of SDN that extends the technology over a WAN. While the SDN architecture is typically the underpinning in a data centre or campus, SD-WAN takes it a step further.

At its most basic level, SD-WAN lets an organisation aggregate a variety of network connection types into its WAN, including MPLS, 4G LTE/5G and DSL; in fact, any internet or other WAN connection capable of carrying IP. One site could be on MPLS, another on 5G and a third on a broadband connection, and the SD-WAN creates an overlay on top of these various connection types to carry traffic between sites as if they all existed on the same WAN. SD-WAN has a centralised controller that can deploy new sites, prioritise traffic and set security policies for the entire network, removing the need to configure complex routing protocols and topologies.

This centralised management software can be configured to enable the SD-WAN to react to network conditions in real time. The SD-WAN can route voice or video traffic over low-latency, high-cost, low-bandwidth links (such as MPLS), while bulk traffic is forwarded via cheaper local internet breakouts. Similar policies are applied to cloud traffic, such as Office 365, Salesforce or other cloud-based SaaS solutions, which is broken out directly via the local internet connection rather than being routed via a central office or data centre.

A common misconception with SDN for WAN is that it’s a replacement for MPLS networks. While SD-WAN can be used to replace MPLS, this might not be ideal depending on real-time traffic needs or user expectation of voice or video call quality. SD-WAN can choose a better path if, for example, you have two separate internet connections via two different providers. However, each path will still be subject to packet loss or latency that may happen on the internet. Traffic cannot be prioritised on the internet in the same way as on MPLS or other private WAN solutions.

If high-quality calls are expected, MPLS and internet circuits can be used together as the underlay for the SD-WAN solution, and savings can be made by reducing the number or capacity of MPLS connections. Alternatively, if an organisation can accept occasional quality issues with video calls or other real-time applications, then two internet connections per site might be suitable.

One other consideration with circuit types and SD-WAN is the option of using what are called ‘content delivery networks’. Large cloud-scale providers such as Google and Apple use these networks. They provide a means of bypassing points on the internet that are normally contended, such as international or intercontinental links. Using an SD-WAN solution with access to a content delivery network could remove the need for an expensive international private MPLS network. It would also mitigate many of the service quality issues that would be present if just using the internet as the SD-WAN underlay.

SD-WAN is an enterprise WAN access technology and is not intended to replace core network functions. For any software defined network that has an overlay/underlay relationship with the network that carries it, and relies on that underlay for transport, the underlay should be considered during the design process.

Typically, WAN SDN uses IPsec at the data plane, with the overlays carried by IPsec tunnels, much in the same way that policy-based IPsec or DMVPN is implemented.

SD-WAN's driving principle is to simplify the way big companies turn up new links to branch offices, better manage the utilisation of those links – for data, voice or video – and potentially save money in the process.

The diagram below shows a typical Cisco SD-WAN deployment, where remote sites, data centres and public cloud services connected into a single SD-WAN overlay are controlled via policy on centralised cloud-based controllers:

SD-WAN lets networks route traffic based on centrally managed roles and rules, no matter what the entry and exit points of the traffic are, with full security. For example, if a user in a branch office is working in Office 365, SD-WAN can route their traffic directly to the closest cloud data centre for that app, improving network responsiveness for the user and lowering bandwidth costs for the business.

SD-WAN benefits

The benefits of deploying WAN SDN technology have slightly different drivers when compared to data centre SDN. An SD-WAN solution is deployed primarily as a WAN access technology and, in some ways, is the next logical evolutionary step from policy-based IPsec or DMVPN-based WAN deployments.

SD-WAN allows for the simplified deployment of new network equipment at remote offices or branches, via the zero-touch deployment model. Traditionally, when migrating a WAN, specialist technicians or engineers would need to attend each remote site to install the new WAN equipment, plug in the new providers’ lines, etc. With SD-WAN, a device can be provisioned by the vendor, so when it is posted out to the site and plugged in, it pulls its configuration from a central location. This removes the need for expensive, highly trained networking staff to travel around the world installing equipment.
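
A hypothetical sketch of the ‘call home’ step in zero-touch deployment: a central service recognises a new device by its serial number, looks up the site it has been assigned to, and returns configuration rendered from a template. The serial numbers, site data and template contents are invented, and real SD-WAN products use their own authenticated onboarding mechanisms.

```python
from string import Template

# Hypothetical records linking each device serial number to the site it is destined for
CLAIMED_DEVICES = {"SN-0001": "branch-leeds", "SN-0002": "branch-york"}
SITE_SETTINGS = {
    "branch-leeds": {"site_id": "101", "lan_subnet": "10.101.0.0/24"},
    "branch-york": {"site_id": "102", "lan_subnet": "10.102.0.0/24"},
}
# Illustrative configuration template; not any vendor's syntax
TEMPLATE = Template("hostname $site\nsite-id $site_id\nlan $lan_subnet\n")


def provision(serial: str) -> str:
    """What a central provisioning service might return when a new device calls home."""
    site = CLAIMED_DEVICES.get(serial)
    if site is None:
        raise PermissionError(f"unknown device {serial}: not claimed by any organisation")
    return TEMPLATE.substitute(site=site, **SITE_SETTINGS[site])


print(provision("SN-0001"))
```

The only site-specific work is entering the serial number and site data centrally; the person plugging the device in needs no networking knowledge.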

As SD-WAN can use any underlay technology that can forward IP, it can send traffic over multiple different networking media. How traffic is forwarded is defined as a policy that looks at the characteristics of each link: what is its Round Trip Delay (RTD)? What is the total link load? Is there a better link to carry this traffic? The immediate beneficiary is latency-sensitive traffic such as voice, which would traditionally require businesses to deploy MPLS to the branch as the single access technology.
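
The link-selection policy described above can be sketched as follows, assuming hypothetical per-application thresholds for round trip delay and link load: links that meet the thresholds are kept, and the policy then prefers either the lowest delay (for voice) or the cheapest link (for bulk traffic). The metric values, thresholds and class names are illustrative, not defaults from any SD-WAN product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Link:
    name: str
    rtd_ms: float     # measured round trip delay
    load_pct: float   # current utilisation
    cost_rank: int    # lower is cheaper (assumed operator preference)


# Hypothetical per-application policy, defined once on the controller
SLA = {
    "voice": {"max_rtd_ms": 150, "max_load_pct": 70, "prefer": "latency"},
    "bulk": {"max_rtd_ms": 1000, "max_load_pct": 95, "prefer": "cost"},
}


def choose_link(app_class: str, links: list[Link]) -> Optional[Link]:
    """Keep links that meet the class's thresholds, then apply its preference."""
    policy = SLA[app_class]
    compliant = [
        link for link in links
        if link.rtd_ms <= policy["max_rtd_ms"] and link.load_pct <= policy["max_load_pct"]
    ]
    if not compliant:
        return None  # a real solution would fall back to a best-effort path
    if policy["prefer"] == "latency":
        return min(compliant, key=lambda link: (link.rtd_ms, link.cost_rank))
    return min(compliant, key=lambda link: (link.cost_rank, link.rtd_ms))


links = [
    Link("mpls", rtd_ms=40, load_pct=50, cost_rank=3),
    Link("broadband", rtd_ms=90, load_pct=30, cost_rank=1),
    Link("5g", rtd_ms=120, load_pct=80, cost_rank=2),
]
print(choose_link("voice", links).name)  # mpls
print(choose_link("bulk", links).name)   # broadband
```

If the MPLS link's load rose above the voice threshold, the same call would move voice onto the broadband link without any per-device change.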

SD-WAN does not necessarily remove the need for a high-quality MPLS link. However, as MPLS is no longer the default access method for all traffic, the MPLS provision can be reduced. It becomes one of many access technologies that WAN SDN can use, depending on the application requirements.

As WAN SDN is an overlay technology, it is logically separate from the underlay networks that carry it. As previously discussed, the data plane of SD-WAN, in most cases IPsec, can be carried by any network capable of carrying IP, which means that the SD-WAN is entirely independent of the various service providers that deploy the underlay. This is a potential benefit when migrating to a new service provider, as the migration can be done with minimal disruption.

LAN SDN

LAN SDN fabrics provide the basic infrastructure for building virtual networks based on policy-based segmentation. The fabric overlay provides services such as host mobility and enhanced security, in addition to normal switching and routing capabilities.

Typically, LAN SDN implementations have more in common with data centre SDN than with SD-WAN, as most of the concepts that are valid for data centre SDN are implemented in the same way in LAN SDN.

One of the key features of LAN SDN is the inclusion of wired and wireless access, homogenised into a single seamless access policy. This means that a user’s experience will be the same, regardless of whether they access the network via a wired or wireless connection. The user’s access is now based on their identity, rather than where or how they are accessing the network.
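
As a minimal sketch of identity-based access, assuming a hypothetical directory and group policy, the function below returns the same virtual network and group tag for a user whether they connect over a wired or wireless medium, and from any location; unknown users fall into a quarantine network. All names and values are illustrative.

```python
# Hypothetical identity directory and group policy held by the central controller
DIRECTORY = {"alice": "finance", "bob": "contractors"}
GROUP_POLICY = {
    "finance": {"virtual_network": "VN-CORP", "group_tag": 10},
    "contractors": {"virtual_network": "VN-GUEST", "group_tag": 40},
}
QUARANTINE = {"virtual_network": "VN-QUARANTINE", "group_tag": 0}


def authorise(username: str, medium: str, location: str) -> dict:
    """Return the access policy for an authenticated user.

    `medium` ("wired"/"wireless") and `location` are accepted but deliberately
    unused: the result depends only on the user's identity.
    """
    group = DIRECTORY.get(username)
    if group is None:
        return dict(QUARANTINE)
    return dict(GROUP_POLICY[group])


print(authorise("alice", "wired", "floor-2"))
print(authorise("alice", "wireless", "cafe"))  # same policy as above
```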

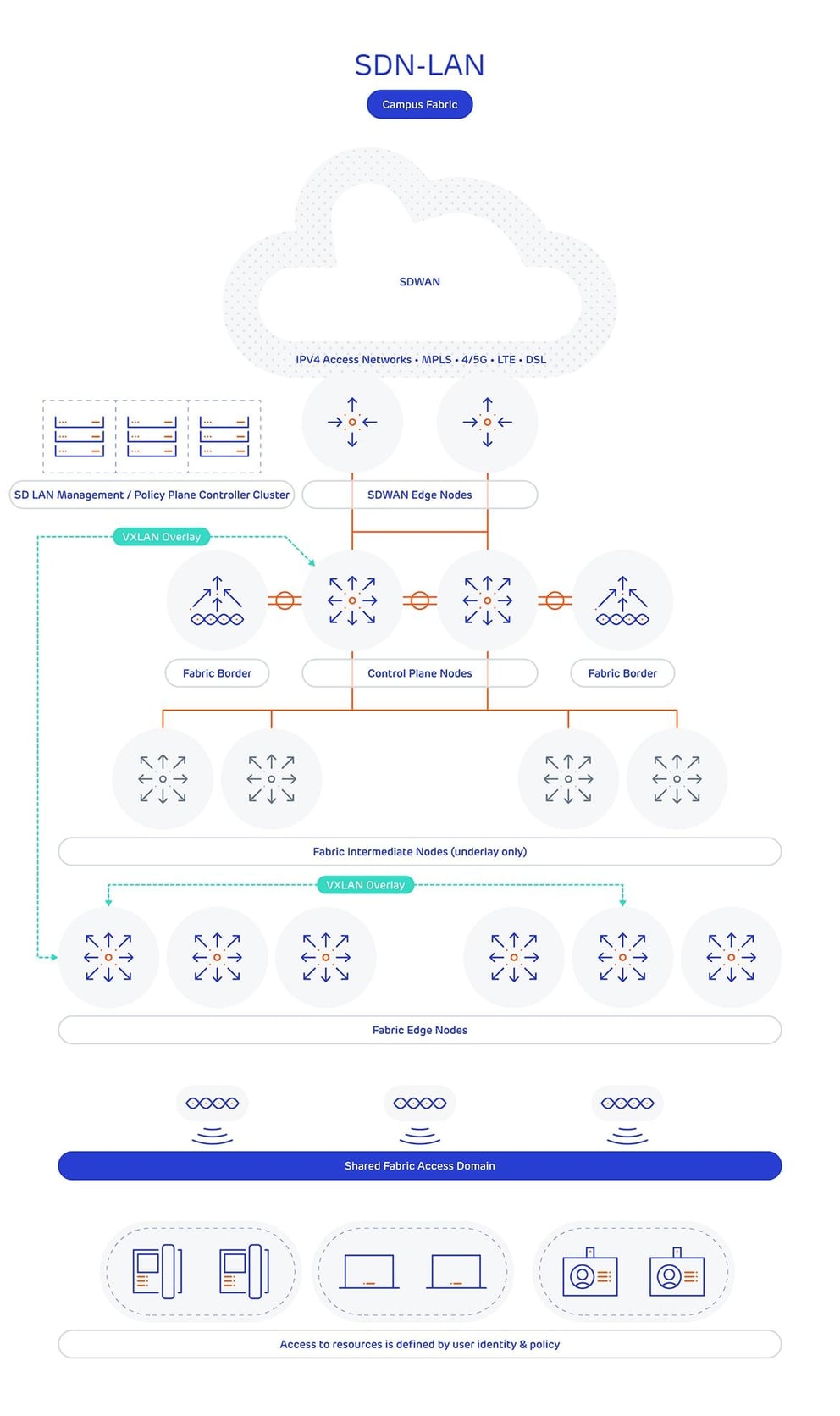

The diagram below shows a typical Cisco SD-LAN (referred to as Software Defined Access or SDA) deployment:

The diagram shows a single large campus, such as a shopping centre, hospital or office, with a fabric deployed, where a consistent end user experience is obtained based on the user’s identity rather than location, regardless of whether the connection is wired or wireless. Network overlays are used to further enforce security policy, and again all configuration and monitoring is completed on the centralised controllers.

At the data plane, LAN SDN uses VXLAN in much the same way as data centre SDN does, with the differences lying in the control plane. It’s common to see control plane protocols, such as the Locator/ID Separation Protocol (LISP), working alongside VXLAN at the data plane.
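
The role of a LISP-style control plane can be sketched as a mapping system that records which fabric edge node each endpoint currently sits behind: an endpoint identifier (EID) maps to a routing locator (RLOC). This toy version illustrates the concept only, not the LISP message exchange; the class name and values are hypothetical, and RLOCs are shown as names rather than IP addresses for readability.

```python
from typing import Optional


class MapServer:
    """Toy mapping system in the spirit of LISP: endpoint ID (EID) -> routing locator (RLOC)."""

    def __init__(self) -> None:
        self._mappings: dict[str, str] = {}

    def register(self, eid: str, rloc: str) -> None:
        # An edge node registers an endpoint when it attaches (or moves) to that node
        self._mappings[eid] = rloc

    def resolve(self, eid: str) -> Optional[str]:
        # An ingress edge node asks where to send VXLAN-encapsulated traffic for this EID
        return self._mappings.get(eid)


ms = MapServer()
ms.register("10.10.1.25", "edge-node-1")  # laptop on a wired port
print(ms.resolve("10.10.1.25"))           # edge-node-1
ms.register("10.10.1.25", "edge-node-7")  # same laptop roams onto wireless elsewhere
print(ms.resolve("10.10.1.25"))           # edge-node-7
```

Because the endpoint keeps its identifier while only its locator changes, this is one way the host mobility mentioned above can be provided.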

LAN SDN benefits

LAN SDN has much more in common with data centre SDN than it does with SD-WAN SDN. Because of this inherent similarity, the drivers for LAN SDN are in some cases the same as data centre SDN.

The campus LAN is the point at which most end users interact with and consume applications, which are in turn delivered by the network infrastructure. As a result, the campus LAN is the most visible part of the network infrastructure and, by extension, is the part that needs to be deployed and controlled in a standard fashion.

LAN SDN allows a campus fabric to be deployed automatically based upon a pre-defined policy. This has the advantage of reducing the overall time to deploy a campus network, because all of the user access and security policies can be created prior to deploying the campus fabric.

LAN SDN technologies, such as Cisco Software Defined Access (SDA), also allow for virtual networks, similar to tenants in Cisco ACI or other SDN DC solutions. These virtual networks are isolated from one another, so that disparate or high-security networks can be kept apart while still sharing the same hardware. This massively reduces the cost of hosting sensitive networks, such as security or industrial control systems. Traditionally, such networks would either have to be on physically separate hardware, greatly increasing costs for hardware and supporting infrastructure, or be separated by complex, difficult-to-manage traditional security controls that could easily result in vulnerable systems being inadvertently exposed to external risks.

From an end user perspective, access is identical whether the ingress traffic enters the network via a wired or wireless medium. So, unlike a traditional LAN, management and security policies are not dependent on the access medium or user location. This, in turn, improves security and end user experience.

As with data centre SDN, application visibility is a primary feature within LAN SDN, whereby the same application controls and zero trust model can apply to the campus fabric. Cisco’s SDA product also employs advanced troubleshooting capabilities, using the additional telemetry data provided to the centralised management controller to offer automated and advanced troubleshooting methods.

Lastly, as LAN SDN uses VXLAN at the data plane, you get the advantages of a layer 2 switched network, such as spanning VLANs and layer 2 adjacencies across large campus networks, but with none of the disadvantages, such as spanning tree issues or the blocking of resilient links. This is combined with all the advantages of a layer 3 routed network, such as fast convergence, reliability, predictable behaviour, broadcast control and ECMP (equal cost multipathing).
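
ECMP is commonly implemented by hashing a flow's header fields, so that every packet of a flow follows the same path while different flows spread across the equal-cost links. The sketch below uses SHA-256 from Python's `hashlib` purely as a deterministic illustration; switches use their own hardware hash functions, and the spine names and flow are invented.

```python
import hashlib


def ecmp_select(five_tuple: tuple, paths: list[str]) -> str:
    """Pick one of several equal-cost paths by hashing the flow's 5-tuple.

    Every packet of the same flow hashes to the same path (avoiding reordering),
    while different flows are spread across all available links.
    """
    key = "|".join(str(part) for part in five_tuple).encode()
    digest = hashlib.sha256(key).digest()
    return paths[int.from_bytes(digest[:4], "big") % len(paths)]


paths = ["spine-1", "spine-2", "spine-3", "spine-4"]
flow = ("10.1.1.10", "10.2.2.20", 6, 51515, 443)  # src IP, dst IP, protocol, src port, dst port
print(ecmp_select(flow, paths))
```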

Software Defined Networking (SDN) now and in the future

SDN is more of an evolution of how a network is deployed and managed. It has changed from a cutting-edge new protocol developed in academia to the more mature and nuanced product set we see today.

SDN primarily grants the ability to deploy and manage a network without the need to configure each device individually. This provides the modern network administrator with the means to efficiently and effectively manage the proliferation of network connectivity required by modern organisations. Also, the specific business requirements drive how this technology is deployed. SDN can be used to replace an existing network solution; however, its true power lies in the way it abstracts the complexity of modern networks. Previously, a new service or application could require months of planning, designing and multiple changes. Today, the same new service might need only a single, simple policy change.

As all of the SDN technologies utilise overlays, it can be a simple task to replace existing network deployments with their SDN counterparts and run an existing logical infrastructure inside an overlay. This would allow your business to continue to maintain service whilst building a platform to migrate to. This effectively means that the SDN deployment can act as an intermediate step towards true SDN.

As stated earlier, the term SDN will likely be replaced with Intent Based Networking (IBN) as SDN technologies come into more common use.

Orchestration is the key to infrastructure as code. As the technology evolves further, it may become more efficient to use a single point of orchestration to define the various policies for the network, compute, storage, hypervisor, application and cloud provision, as opposed to creating the same policies on each one in turn.